What Is CMAF? (Update)

The battle to reduce video latency while maintaining a quality viewer experience has led to widespread adoption of the aptly named Common Media Application Format (CMAF). This format is the product of a coordinated effort to promote efficiency and reduce latency industry-wide. Even with Apple’s Low-Latency HLS extension threatening to undermine this effort, CMAF still holds a lot of potential for anyone looking to simplify and speed up the streaming process. So, what exactly is CMAF and how can it benefit your streaming efforts?

What is CMAF?

Common Media Application Format or CMAF is a relatively new format for packaging and delivering various forms of HTTP-based media. This standard simplifies the delivery of media to playback devices by working with both the HLS and DASH protocols to package data under a uniform transport container file. It also employs chunked encoding and chunked transfer encoding to lower latency. This results in lower costs for most businesses thanks to reduced storage needs and reduced risk of lost business due to video latency.

It’s worth noting that CMAF is itself not a protocol but rather a container and set of standards for single-approach video streaming that works with protocols like HLS and MPEG-DASH. In this way, the greatest accomplishment of CMAF streaming is more political than technical in that it represents an industry-wide effort to improve streaming across the board.

CMAF Snapshot

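| Purpose: | Goals: | Timeline: |
| --- | --- | --- |
| Simplify the delivery of HTTP-based streaming media | Cut costs, minimize workflow complexity, reduce latency | February 2016: Apple and Microsoft propose CMAF to MPEG. June 2016: Apple announces fragmented MP4 support for HLS. July 2017: specifications finalized. January 2018: standard published. |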

Why Do We Need CMAF?

Competing codecs, protocols, media formats, and devices make the already complex world of live streaming infinitely more so. In addition to causing a headache, the use of different media formats increases streaming latency and costs.

What could be achieved with a single format for each rendition of a stream instead requires content distributors to create multiple copies of the same content. In other words, the streaming industry makes video delivery unnecessarily expensive and slow.

The number of different container files alone is exhausting: .ts, .mp4, .wma, .mpeg, .mov… the list goes on. But what if competing technology providers could agree on a standard streaming format across all playback platforms? Enter the Common Media Application Format, or CMAF.

CMAF brings us closer to the ideal world of single-approach encoding, packaging, and storing. What’s more, it promises to drop end-to-end delivery time from 30-45 seconds to less than three seconds.

How, why, and to what extent is this possible? Read on for the nitty-gritty.

Leading up to CMAF

Back in the day, RTMP (Real-Time Messaging Protocol) was the go-to method for streaming video over the internet. This proprietary protocol enabled lickety-split video delivery but encountered issues getting through firewalls.

As many browsers began to phase out support for Flash, the industry shifted to HTTP-based (Hypertext Transfer Protocol) technologies for adaptive bitrate streaming. For Apple, this took the form of Apple HLS (HTTP Live Streaming); whereas MPEG-DASH (Dynamic Adaptive Streaming over HTTP) became the international standard.

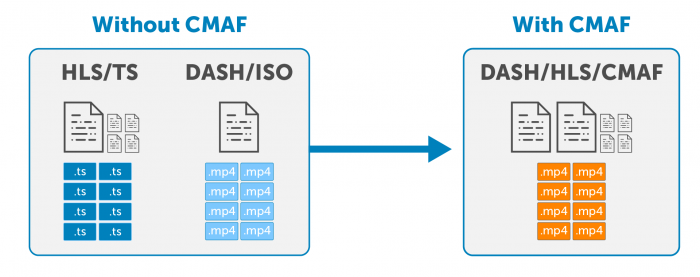

But with different protocols came different file containers. HLS specifies the use of .ts format, while DASH almost uniformly uses .mp4 containers based on ISOBMFF.

That was a lot of acronyms, we know. The takeaway here is that technology providers support distinct containers for streaming – which impacts playback across the myriad of devices used today.

The problem? Any content distributor wanting to reach users on both Apple and Microsoft devices must encode and store the same audio and video data twice. With users accessing streams across iPhones, smart TVs, Xboxes, and PCs, this obstacle didn’t go unnoticed.

Take Akamai’s word for it: “These same files, although representing the same content, cost twice as much to package, cost twice as much to store on origin, and compete with each other on Akamai edge caches for space, thereby reducing the efficiency with which they can be delivered.”*

*It’s worth noting that Streaming Media endorses Wowza as an alternative to duplicative storage and encoding costs: “You can avoid the increased storage and encoding costs by dynamically packaging your content from MP4 files to DASH, HLS, and Smooth Streaming format via […] products like the Wowza Streaming Engine.”
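To put rough numbers on the duplication problem, here is a back-of-the-envelope sketch. The bitrate ladder and one-hour duration are hypothetical values chosen for illustration, and the math ignores the small overhead differences between .ts and .mp4 containers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int  # hypothetical average bitrate (audio + video)


def storage_gb(renditions, duration_s, container_copies):
    """Approximate origin storage in GB for one title.

    Container overhead is ignored, so this is only a rough estimate.
    """
    total_kbits = sum(r.bitrate_kbps for r in renditions) * duration_s
    total_bytes = total_kbits * 1000 / 8
    return container_copies * total_bytes / 1e9


# Hypothetical adaptive bitrate ladder for a one-hour video.
LADDER = [
    Rendition("1080p", 6000),
    Rendition("720p", 3000),
    Rendition("480p", 1500),
    Rendition("360p", 800),
]

# Packaging the same renditions as both .ts and .mp4 versus fMP4 only.
two_containers = storage_gb(LADDER, duration_s=3600, container_copies=2)
one_container = storage_gb(LADDER, duration_s=3600, container_copies=1)

print(f"Two containers: {two_containers:.2f} GB")
print(f"One container:  {one_container:.2f} GB")
```

Whatever ladder you plug in, the two-container figure comes out at double the single-container one, which is the cost Akamai describes.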

The Advent of CMAF

CMAF came about from cross-company collaboration. In February of 2016, Apple and Microsoft came to the Moving Picture Experts Group (MPEG) with a proposal. By establishing a new standard called the Common Media Application Format (CMAF), the two organizations would work together to reduce complexity when delivering video online.

Players moved fast. On June 15, 2016, Apple announced that it would add fragmented MP4 support to HLS. This meant that Apple would use .mp4 delivery for video streams, the very container that Microsoft already uses.

By July of 2017, co-developers had finalized specifications for CMAF. And in January 2018, the standard was published.

The benefits of encoding, packaging, and caching a single container for video delivery go without saying. But CMAF seeks to do more than just reduce complexity. Even after taking the reins from RTMP years ago, HTTP-based video delivery still lacks the real-time delivery options that viewers demand. Could CMAF also improve latency? With chunked encoding and chunked transfer encoding, the new specification set out to do just that.

How CMAF Works

Single Format: Before CMAF, Apple’s HLS protocol used the MPEG transport stream container format, or .ts (MPEG-TS). Other HTTP-based protocols such as DASH used the fragmented MP4 format, or .mp4 (fMP4).

Microsoft and Apple have now agreed to reach audiences across the HLS and DASH protocols using a standardized transport container — ISOBMFF in the form of fragmented MP4. Theoretically, this means that content distributors can deliver content using only the .mp4 container.
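For a feel of what "ISOBMFF in the form of fragmented MP4" looks like on the wire, here is a minimal sketch that walks the top-level boxes of a byte string. Each ISOBMFF box starts with a 32-bit big-endian size followed by a four-character type. The sample data is synthetic placeholder bytes rather than real media, and `make_box` and `list_top_level_boxes` are names made up for this illustration.

```python
import struct


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a minimal box: 32-bit big-endian size, 4-char type, payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


def list_top_level_boxes(data: bytes):
    """Return (type, size) for each top-level box in an ISOBMFF byte string.

    Handles only the plain 32-bit size form. The 64-bit (size == 1) and
    to-end-of-file (size == 0) forms are out of scope for this sketch.
    """
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        if size < 8:
            raise ValueError(f"unsupported box size {size} at offset {offset}")
        boxes.append((box_type.decode("ascii"), size))
        offset += size
    return boxes


# Synthetic stand-in for a fragmented MP4 stream: initialization data
# (ftyp + moov) followed by two media fragments (moof + mdat each).
# Payloads are placeholder bytes, not playable media.
sample = (
    make_box(b"ftyp", b"isom" + b"\x00" * 4)
    + make_box(b"moov", b"\x00" * 16)
    + make_box(b"moof", b"\x00" * 12)
    + make_box(b"mdat", b"\x00" * 100)
    + make_box(b"moof", b"\x00" * 12)
    + make_box(b"mdat", b"\x00" * 100)
)

for box_type, size in list_top_level_boxes(sample):
    print(box_type, size)
```

The repeating moof/mdat pairs are what make the file "fragmented": each pair carries its own metadata and media, so it can be delivered on its own once the initialization data has been sent.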

Chunked Encoding: CMAF streaming represents a coordinated industry-wide effort to lower latency with chunked encoding and chunked transfer encoding. This process involves breaking the video into smaller chunks of a set duration, which can then be immediately published upon encoding. That way, delivery can take place while later chunks are still processing. Think of it like getting your meal in courses instead of all at once. You could be enjoying a tasty appetizer while the kitchen prepares your next course instead of waiting hungrily while the meal “buffers.”
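The timing benefit of chunking can be sketched with simple arithmetic. The example assumes a live encoder that produces media at real-time speed, so a unit of N milliseconds is complete N milliseconds after its first frame arrives. The 6-second segment and 500-millisecond chunk are hypothetical values, and network, CDN, and player buffering delays are ignored.

```python
from typing import List


def chunk_ready_times_ms(segment_ms: int, chunk_ms: int) -> List[int]:
    """Return the time (ms from segment start) at which each chunk is ready.

    Assumes real-time encoding and a segment made of equal-sized chunks.
    """
    if chunk_ms <= 0 or segment_ms % chunk_ms != 0:
        raise ValueError("segment must be a whole number of chunks")
    return [(i + 1) * chunk_ms for i in range(segment_ms // chunk_ms)]


SEGMENT_MS = 6000  # hypothetical segment duration
CHUNK_MS = 500     # hypothetical chunk duration

ready = chunk_ready_times_ms(SEGMENT_MS, CHUNK_MS)

# Without chunking, nothing can be published until the whole segment exists.
wait_without_chunking = SEGMENT_MS
# With chunking, the first chunk can go out as soon as it is encoded.
wait_with_chunking = ready[0]

print(f"Chunks per segment: {len(ready)}")
print(f"First publish without chunking: {wait_without_chunking} ms")
print(f"First publish with chunking:    {wait_with_chunking} ms")
```

In course-by-course terms, the appetizer leaves the kitchen after half a second instead of six. The remaining chunks follow at the same pace while the encoder keeps working.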

File Encryption and Digital Rights Management: Not unlike the issue of different media formats that CMAF sought to address, another incompatibility exists across the industry: digital rights management (DRM) and file encryption. Supporting multiple DRMs (FairPlay, PlayReady, and Widevine) means also supporting incompatible encryption modes.

Industry players have now agreed to reach a standard in this realm called Common Encryption (CENC), but standardization doesn’t happen overnight.

CMAF and HLS

Before we explore the distinction between CMAF and HLS streaming, we should first talk about HLS vs. Low-Latency HLS, the latter of which was developed by Apple after the advent of CMAF and seems to challenge the assumption that CMAF would become the industry-wide standard.

HLS (HTTP Live Streaming) is an Apple-developed protocol that has historically favored stream reliability over latency concerns, clocking in at around a 5-20 second delay. It was Apple’s answer to problems with live streaming playback on the iPhone and successfully improved viewer experience with minimal buffering. CMAF can work with HLS for presentation profiles encrypted with ‘cbcs’ or ‘cenc’. The third presentation profile, unencrypted, is not supported.

Low-Latency HLS is a protocol extension for HLS that significantly reduces latency, answering many of the same needs as CMAF. Why Apple felt the need to extend the HLS streaming protocol when they already had the CMAF format working with it to reduce latency is a bit of a mystery and has some wondering how committed Apple is to the standardization effort.

With all this in mind, your options when using HLS are threefold:

- Traditional HLS with high reliability but high latency

- HLS with the Low-Latency HLS protocol extension to improve latency

- HLS with the CMAF format to improve latency and standardize container files

How fast is fast? The ranges for Low-Latency HLS and CMAF vary a bit. Low-Latency HLS typically runs about two to eight seconds behind. CMAF is said to run from roughly two to five seconds behind. In any case, the two are fairly neck-and-neck. However, CMAF has the added benefit of standardized container files (fMP4), the widespread adoption of which will lead to lower storage costs for all.
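To make the third option a little more concrete, here is a rough sketch of an HLS media playlist that points at fragmented MP4 segments. The tags come from the HLS specification, where `#EXT-X-MAP` tells the player where to find the initialization data. The helper name, file names, and durations are hypothetical, and the version number follows common fMP4 examples, so check it against the specification for your own workflow.

```python
def build_media_playlist(init_uri, segment_uris, target_duration_s):
    """Build a simple on-demand HLS media playlist for fMP4 segments.

    Assumes every segment lasts exactly target_duration_s seconds.
    """
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:7",  # assumed version, taken from common fMP4 examples
        f"#EXT-X-TARGETDURATION:{target_duration_s}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        f'#EXT-X-MAP:URI="{init_uri}"',  # initialization data (ftyp + moov)
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{target_duration_s:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


# Hypothetical file names for illustration only.
playlist = build_media_playlist(
    init_uri="init.mp4",
    segment_uris=["seg_0.m4s", "seg_1.m4s", "seg_2.m4s"],
    target_duration_s=6,
)
print(playlist)
```

Because the segments themselves are plain fMP4, a DASH manifest could in principle reference the same files, which is where the storage savings come from.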

CMAF and RTMP

RTMP (Real-Time Messaging Protocol) was originally developed by Macromedia, which Adobe later acquired, to work with the Flash player, putting it at the forefront of streaming technology. However, it has since been sidelined in favor of HTTP-based technology.

Certainly, you can still use RTMP, but today it works mainly for ingest and is no longer really an option for playback. It also lacks the optimizations that make more recent technologies scalable for higher-quality viewing experiences.

That said, with a latency of around five seconds, RTMP has let businesses stream quickly and reliably for a long time.

CMAF and WebRTC

Now let’s view the other end of the spectrum with the cutting-edge WebRTC (Web Real-Time Communications) protocol. It’s the fastest available live-streaming option and straightforward to use. If CMAF streaming is low latency, then WebRTC is ultra-low latency (ULL) with sub-second latency. It’s also easy to adapt to different network requirements and free to use.

How does it accomplish all this? WebRTC adds real-time voice, text, and video communications between browsers and devices without the need for plugins. That said, it’s specifically designed for video conferencing and is therefore not scalable to larger audiences right out of the box. That’s where streaming platforms like Wowza can help. Our Real Time Streaming at Scale solution is functionally WebRTC with a workflow that helps it scale.

CMAF Summarized

One of the easiest ways to reduce screen-to-screen latency and the costs associated with storing multiple media file types is by simplifying video delivery. CMAF’s goals were threefold:

- Cut costs

- Minimize workflow complexity

- Reduce latency

Have these been achieved? Yes-ish.

CMAF will streamline server efficiency for serving most endpoints. And in recent demos, this new alternative has delivered sub-three-second latency.

That said, legacy devices and browsers that aren’t upgraded will still require unique container files for playback. Put another way, continuing to reach the broadest audience possible requires additional accommodations (and costs) that CMAF can’t retroactively address.

That’s where Wowza comes in. Wowza Streaming Engine supports video delivery across a variety of formats, including MPEG-TS, Apple HLS, and Adobe RTMP. This ensures video scalability as the adoption of CMAF-compliant devices continues to grow. What’s more, Wowza Streaming Engine now supports CMAF streaming – with low-latency optimization on the roadmap.

And if you’re looking for a streamlined solution that includes access to an easy-to-use CMS, try out our new and improved Wowza Video streaming platform.