The Role of WebRTC in Low-Latency Media Streaming

Adobe recently wrote a touching obituary to Flash, announcing 2020 as the date of passing. While we’ve all known the end was near, having a date has set us free to look seriously at other options.

One of the major advantages of using Flash was the media-streaming capabilities it offered, which rely on a protocol known as RTMP (Real-Time Messaging Protocol). With browsers already making it harder to use Flash, many content providers and streaming services have begun moving away from Flash and RTMP toward other options.

What Are the Available Alternatives?

Among the alternatives are HLS (HTTP Live Streaming) and MPEG-DASH (Dynamic Adaptive Streaming over HTTP), which work in much the same way. Both are mainly used to send media to devices and browsers in situations where quality matters more than latency.

HLS and MPEG-DASH use a packetized delivery model that cuts video into “chunks” and reassembles them based on a manifest. If some of those chunks get lost, they are retransmitted to preserve data quality. In turn, implementations tend to buffer, waiting until all the data is available before playing it to the user. The end result: The word “live” is lost in translation.
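To make the buffering cost concrete, here is a rough back-of-the-envelope sketch. The model and the numbers are assumptions chosen for illustration; they do not come from the HLS or MPEG-DASH specifications or from any particular player. The idea is simply that a player holding several whole chunks before playback starts is at least that many chunk durations behind the live edge.

```typescript
// Illustrative model only: all field names and values are hypothetical.
interface SegmentedStreamConfig {
  segmentDurationSeconds: number; // length of each media chunk
  bufferedSegments: number;       // chunks the player holds before playing
  encodeAndPublishSeconds: number; // time to encode and publish one chunk
}

// Lower bound on how far behind "live" the viewer is under this model.
function estimateLatencySeconds(config: SegmentedStreamConfig): number {
  const bufferDelay = config.segmentDurationSeconds * config.bufferedSegments;
  return bufferDelay + config.encodeAndPublishSeconds;
}

const longChunks: SegmentedStreamConfig = {
  segmentDurationSeconds: 6,
  bufferedSegments: 3,
  encodeAndPublishSeconds: 2,
};

const shortChunks: SegmentedStreamConfig = {
  segmentDurationSeconds: 2,
  bufferedSegments: 3,
  encodeAndPublishSeconds: 1,
};

console.log(estimateLatencySeconds(longChunks));  // 6 * 3 + 2 = 20
console.log(estimateLatencySeconds(shortChunks)); // 2 * 3 + 1 = 7
```

Shrinking the chunks helps, but under this model the delay never drops below a few chunk durations, which is why tuning alone struggles to reach conversational latencies.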

One route some are taking is to improve HLS and MPEG-DASH implementations, polishing and optimizing them to reach lower latencies. The other alternative available to us today is to adopt WebRTC (Web Real-Time Communication).

What Is WebRTC?

WebRTC is a free, open-source collection of communications protocols and APIs (Application Programming Interfaces). It was designed with bidirectional, real-time communications in mind. One of its main requirements is that latency be kept as low as possible, because no one can hold a real conversation when latency is one second or more.

The goal, then, is to take the capabilities of WebRTC and repurpose them from a two-way conversation into a one-way media stream. It is possible, but not always easy.
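On the viewer's side, one small piece of that repurposing can be sketched as follows. In the browser API, `RTCPeerConnection.addTransceiver` accepts a `direction` of `"recvonly"`, which tells the other end that this peer wants to receive media without sending any. The helper name `requestReceiveOnly`, the `ReceiveOnlyCapable` interface, and the fake connection are assumptions made so the sketch runs outside a browser; they are not part of WebRTC itself.

```typescript
type MediaKind = "audio" | "video";

// Minimal slice of a peer connection used by this sketch (hypothetical
// interface). A browser's RTCPeerConnection has a compatible addTransceiver.
interface ReceiveOnlyCapable {
  addTransceiver(kind: MediaKind, init: { direction: "recvonly" }): unknown;
}

// Ask for each kind of media in receive-only mode: a viewer, not a caller.
function requestReceiveOnly(
  pc: ReceiveOnlyCapable,
  kinds: MediaKind[] = ["video", "audio"],
): void {
  for (const kind of kinds) {
    pc.addTransceiver(kind, { direction: "recvonly" });
  }
}

// Stand-in connection that only records the calls it receives.
const recorded: string[] = [];
const fakeConnection: ReceiveOnlyCapable = {
  addTransceiver(kind, init) {
    recorded.push(`${kind}:${init.direction}`);
    return undefined;
  },
};

requestReceiveOnly(fakeConnection);
console.log(recorded); // ["video:recvonly", "audio:recvonly"]
```

In a real page the helper would be called on a `new RTCPeerConnection()` before creating the offer. Exchanging that offer and the answer with the media server is application-specific signaling and is left out here, which is a large part of the "not always easy."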

WebRTC: Higher Price Tag, Lower-Latency Streaming

The main difference between media streaming and live video calling is the intensity: Live video calling requires much more “presence” on both ends. The users interact with each other, and are aware of each other at all times. This means they are stateful in every possible way, which takes up computing resources.

Media streaming, on the other hand, doesn’t have this tight coupling. Two separate sessions take place: one between the broadcaster and the media server, and one between the media server and its viewers. Each has its own set of rules, capabilities, optimizations and needs.

When you take a live video-calling technology and try to mold it to a media-streaming scenario, you usually run into a scaling problem, one where the cost of scaling becomes prohibitive.

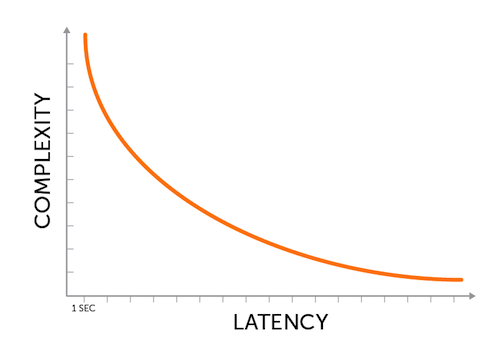

The reason is that running WebRTC requires extra resources. This makes sense, because the lower the latency we want to achieve, the more energy and resources we need to spend.

While using WebRTC is more expensive than, for example, going with HLS, it also means you’ll be able to meet the low-latency requirements you may have for your live-streaming service.

The Future of WebRTC for Streaming Media

WebRTC has been with us for six years now. Most of this time, it has been used for video calling. Live-streaming professionals started to experiment with WebRTC two to three years ago, with solutions focusing mainly on the broadcasters.

For example, many webinar platforms that adopt WebRTC end up using it on the broadcaster’s side only, leaving the viewer’s side to a higher-latency technology. These solutions made use of open-source and commercial technologies that were mostly used for video calling.

With Flash dying, and new, more interactive types of live-streaming services on the rise, we are seeing new vendors and implementations that are making use of WebRTC in ways more suitable for streaming than for video calling. One example of this is Beam, a live-streaming gaming platform that was acquired and rebranded by Microsoft as Mixer.

These types of solutions loosen the coupling between devices, keeping less state than a video call does, in order to increase scale and reduce costs. They no longer talk about SFUs (Selective Forwarding Units) and simulcast (though they might be using them), but rather focus on terms such as ABR (Adaptive Bitrate), which are part and parcel of media streaming.
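For readers coming from the video-calling side, the core idea behind ABR can be sketched in a few lines: the stream is offered at several bitrates, and each viewer is given the highest one that fits the bandwidth measured for them. The ladder values, the `safetyFactor` parameter, and the function name below are all hypothetical; real services use more elaborate logic.

```typescript
interface Rendition {
  name: string;
  bitrateKbps: number;
}

// Hypothetical bitrate ladder, for illustration only.
const ladder: Rendition[] = [
  { name: "240p", bitrateKbps: 400 },
  { name: "480p", bitrateKbps: 1200 },
  { name: "720p", bitrateKbps: 2500 },
  { name: "1080p", bitrateKbps: 5000 },
];

// Pick the highest rendition whose bitrate fits within a fraction
// (safetyFactor) of the measured bandwidth; fall back to the lowest.
function pickRendition(
  renditions: Rendition[],
  measuredKbps: number,
  safetyFactor = 0.8,
): Rendition {
  if (renditions.length === 0) {
    throw new Error("rendition ladder is empty");
  }
  const sorted = [...renditions].sort((a, b) => a.bitrateKbps - b.bitrateKbps);
  const budgetKbps = measuredKbps * safetyFactor;
  let choice = sorted[0]; // lowest rendition is the fallback
  for (const rendition of sorted) {
    if (rendition.bitrateKbps <= budgetKbps) {
      choice = rendition;
    }
  }
  return choice;
}

console.log(pickRendition(ladder, 3500).name); // budget 2800 kbps -> "720p"
console.log(pickRendition(ladder, 300).name);  // budget 240 kbps -> "240p" (fallback)
```

The decision is made per viewer and needs only a bandwidth estimate, which is part of why it scales more cheaply than keeping full call-style state for every participant.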

WebRTC is going to take a front-row seat in live-streaming media, but the ecosystem bringing it to bear will be different from the one focused on video calling. It will be interesting to see what the future holds for this emerging technology.