Comprehensive Guide to HLS Streaming: How HTTP Live Streaming Works

What is Streaming?

Streaming is the real-time delivery of audio and video over the internet, where users can consume content as it arrives rather than waiting for the entire file to download. This contrasts with traditional downloading, which requires a full file transfer before playback begins.

There are two primary types of streaming:

- Live streaming: Broadcasting content as it’s being created (e.g., sports, events).

- On-demand streaming: Pre-recorded content that users can access anytime (e.g., movies, TV shows).

Streaming relies on techniques such as segmented delivery (as in HLS) and adaptive bitrate (ABR) streaming to keep playback smooth even over unstable network conditions.

What is HTTP Live Streaming (HLS)?

HTTP Live Streaming (HLS) is a widely adopted media streaming protocol developed by Apple. First introduced in 2009, HLS was designed to deliver video and audio content over HTTP, making it ideal for delivery to Apple devices. Since then, its usage has expanded across virtually all modern platforms and devices, making it a go-to solution for scalable, internet-based streaming.

The protocol works by breaking video into small file segments that are downloaded and played sequentially. This segmented approach makes HLS highly reliable, adaptable to network conditions, and easy to deliver using existing HTTP infrastructure, including CDNs. HLS is now synonymous with both live and on-demand video streaming and continues to evolve, incorporating features like low-latency streaming, advertising, captions and DRM.

What is Adaptive Bitrate Streaming in HLS?

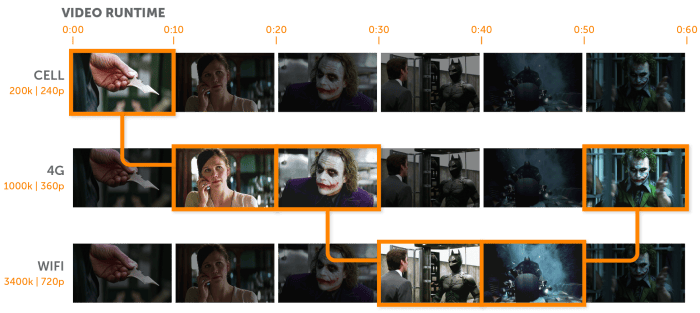

Adaptive bitrate (ABR) streaming is one of the key features that makes HLS so effective across a variety of devices and connection speeds. Instead of delivering a single stream to every viewer, ABR allows the player to dynamically switch between different bitrate renditions of the same video based on the viewer’s current network conditions.

This process relies on:

- Multiple renditions of the video, each encoded at different resolutions and bitrates.

- Real-time monitoring of bandwidth availability and device performance.

- Automatic switching between renditions without interrupting playback.

Benefits of ABR in HLS:

- Ensures a smooth viewing experience, even with fluctuating internet speeds.

- Reduces buffering and playback interruptions.

- Optimizes video quality for each individual user.

For example, a user on a strong Wi-Fi connection might receive a 1080p stream, while someone on mobile data may receive a 480p version—without ever noticing a manual switch.
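The selection step can be sketched in a few lines of Python. This is a minimal illustration, not how any particular player implements ABR: the `Rendition` class, the bitrate ladder, and the `safety_factor` of 0.8 are all assumptions made for the example, and real players also weigh buffer level, screen size, and device performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int          # vertical resolution in pixels
    bandwidth_bps: int   # bitrate advertised for this rendition


# Hypothetical bitrate ladder; the values are illustrative only.
LADDER = [
    Rendition("360p", 360, 800_000),
    Rendition("480p", 480, 1_400_000),
    Rendition("720p", 720, 2_800_000),
    Rendition("1080p", 1080, 5_000_000),
]


def pick_rendition(ladder, measured_bps, safety_factor=0.8):
    """Return the highest-bitrate rendition that fits within a safety
    margin of the measured throughput, or the lowest one if none fits."""
    budget = measured_bps * safety_factor
    ordered = sorted(ladder, key=lambda r: r.bandwidth_bps)
    best = ordered[0]  # fall back to the lowest rendition
    for rendition in ordered:
        if rendition.bandwidth_bps <= budget:
            best = rendition
    return best


# A viewer measuring 8 Mbps is offered 1080p; one measuring 2 Mbps gets 480p.
print(pick_rendition(LADDER, 8_000_000).name)
print(pick_rendition(LADDER, 2_000_000).name)
```

Leaving headroom below the measured throughput is a common design choice: it lowers the chance that a brief dip in bandwidth stalls playback before the player can switch down.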

Apple continues to refine ABR functionality with features like “Rendition Reports” and “Part Hold Back” introduced in LL-HLS to improve predictability and reduce latency.

How HTTP Video Streaming Works

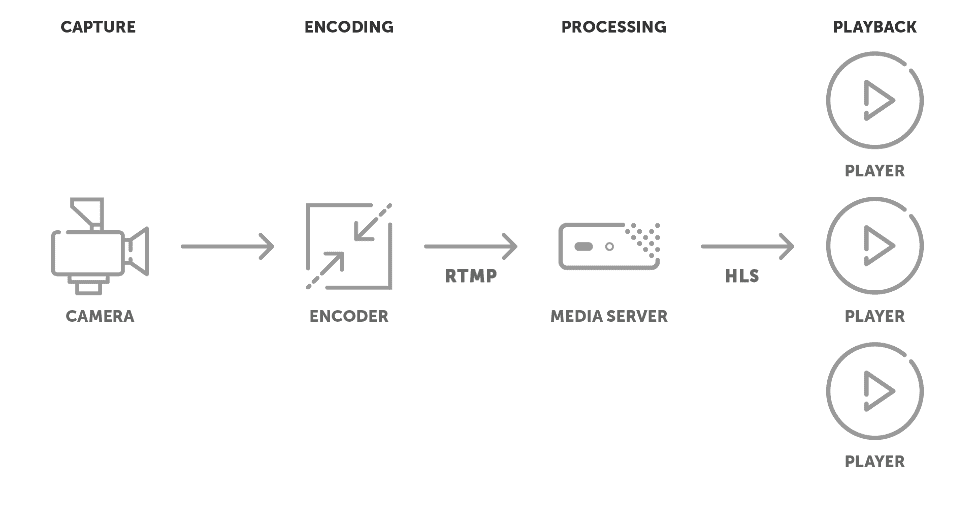

At a high level, HLS works by converting a continuous video stream into a series of smaller media files, called “segments.” These segments are then delivered to viewers over HTTP. Playlist files in M3U8 format tell the player what to request: a master playlist lists the available renditions, and each rendition’s media playlist lists its segments in the order they should be played.
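To make the playlist idea concrete, here is a small Python sketch that reads a master playlist and extracts one entry per rendition. The sample playlist, its URIs, and the `parse_master_playlist` helper are hypothetical and written for this example. Only the `#EXT-X-STREAM-INF` tag and its `BANDWIDTH`, `RESOLUTION`, and `CODECS` attributes come from the HLS specification, and the parser ignores every other tag.

```python
import re

# Hypothetical master playlist with two renditions.
SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=854x480,CODECS="avc1.4d401f,mp4a.40.2"
480p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,CODECS="avc1.640028,mp4a.40.2"
1080p/index.m3u8
"""

# Matches NAME=value pairs; quoted values may contain commas.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_master_playlist(text):
    """Return a list of dicts, one per rendition, in playlist order."""
    variants = []
    pending = None  # attributes of the most recent EXT-X-STREAM-INF tag
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_text = line.split(":", 1)[1]
            pending = {k: v.strip('"') for k, v in ATTR_RE.findall(attr_text)}
        elif line and not line.startswith("#") and pending is not None:
            # The URI line that follows the tag names the media playlist.
            variants.append({
                "uri": line,
                "bandwidth": int(pending["BANDWIDTH"]),
                "resolution": pending.get("RESOLUTION"),
                "codecs": pending.get("CODECS"),
            })
            pending = None
    return variants


for variant in parse_master_playlist(SAMPLE_MASTER):
    print(variant["uri"], variant["bandwidth"], variant["resolution"])
```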

Here’s a simplified view of the process:

- Encoding: The original video is encoded into multiple renditions at different bitrates and resolutions.

- Segmentation: Each rendition is broken into small segments (typically 2–6 seconds).

- Playlist Creation: An M3U8 playlist advertises the available renditions as lists of segments.

- Delivery: Viewers request segments via standard HTTP. Because each segment is a discrete file, CDNs can cache it like any other web asset.

- Playback: The media player downloads the optimal rendition based on network conditions and stitches the segments together in real time for seamless viewing.
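The segmentation and playlist steps above can be sketched as follows. This is an illustrative generator for a single rendition's media playlist, not the output of any specific packager: the `build_media_playlist` function and the `segmentNNNNN.ts` naming scheme are assumptions, while the tags themselves (`#EXT-X-TARGETDURATION`, `#EXT-X-MEDIA-SEQUENCE`, `#EXTINF`, `#EXT-X-ENDLIST`) are standard HLS.

```python
import math


def build_media_playlist(segment_durations, first_sequence=0, ended=True):
    """Build the text of a media playlist for one rendition.

    segment_durations: segment lengths in seconds, in playback order.
    ended: True for on-demand content; False leaves a live playlist open.
    """
    # Rounding the longest segment up is a conservative target duration.
    target = math.ceil(max(segment_durations))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows fractional EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for offset, duration in enumerate(segment_durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        # Hypothetical file naming; real packagers choose their own.
        lines.append(f"segment{first_sequence + offset:05d}.ts")
    if ended:
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


print(build_media_playlist([6.0, 6.0, 4.5]))
```

For a live stream, a packager would call something like this repeatedly with `ended=False`, dropping old segments from the front and advancing `first_sequence` so players can tell which segments are new.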

Modern enhancements such as Low-Latency HLS (LL-HLS) reduce delay between capture and playback to just a few seconds, enabling real-time interaction for live events and sports broadcasts.
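A rough calculation shows where the latency saving comes from. The sketch below assumes the player starts about three segment durations behind the live edge for regular HLS, and about three partial-segment durations behind it for LL-HLS. That multiple is a rule of thumb in line with the hold-back guidance in the HLS specification, and the `live_edge_delay` function is hypothetical. Encoding, CDN, and network delays are deliberately ignored, so actual end-to-end latency will be higher.

```python
def live_edge_delay(target_duration, part_target=None, hold_back_multiple=3):
    """Approximate how far behind the live edge a player starts, in seconds.

    target_duration: segment length in seconds.
    part_target: partial-segment length in seconds for LL-HLS, or None.
    hold_back_multiple: assumed number of durations held back (rule of thumb).
    """
    unit = part_target if part_target is not None else target_duration
    return hold_back_multiple * unit


# 6-second segments without LL-HLS versus 0.5-second parts with LL-HLS.
print(live_edge_delay(6))
print(live_edge_delay(6, part_target=0.5))
```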

This process makes HLS incredibly adaptable to a wide variety of network environments and device types.

HTTP and Transport Protocols in HLS

When it comes to streaming video content over the internet, selecting the right protocols can significantly impact performance, reliability, and user experience. HTTP Live Streaming (HLS) is among the most widely adopted streaming protocols, renowned for its adaptability, reliability, and extensive device compatibility. Understanding the underlying protocols—such as HTTP for content delivery and TCP or UDP as transport mechanisms—is essential for optimizing your streaming strategy and ensuring high-quality playback for your audience. Let’s dive deeper into these critical components that make HLS a robust solution for delivering media at scale.

What is HTTP?

Hypertext Transfer Protocol (HTTP) is the foundational protocol used for transmitting data on the World Wide Web. It defines how messages are formatted and transmitted, and how web servers and browsers should respond to various commands. In the context of streaming, HTTP facilitates the delivery of media content over the internet, leveraging existing web infrastructure to ensure broad compatibility and scalability.
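One practical consequence is that every HLS segment is just a URL fetched with an ordinary HTTP GET. The sketch below shows how a client might turn the URI lines of a media playlist into absolute URLs by resolving them against the playlist's own address. The `resolve_segment_urls` helper and the example hostnames are hypothetical; `urljoin` is part of the Python standard library.

```python
from urllib.parse import urljoin


def resolve_segment_urls(playlist_url, playlist_text):
    """Return absolute URLs for every URI line in a playlist.

    Lines starting with '#' are tags or comments and are skipped.
    Relative URIs are resolved against the playlist's own URL.
    """
    urls = []
    for raw in playlist_text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            urls.append(urljoin(playlist_url, line))
    return urls


# Hypothetical playlist mixing a relative and an absolute segment URI.
playlist = (
    "#EXTM3U\n"
    "#EXTINF:6.0,\n"
    "seg1.ts\n"
    "#EXTINF:6.0,\n"
    "https://other.example.com/seg2.ts\n"
)
for url in resolve_segment_urls(
    "https://cdn.example.com/live/720p/index.m3u8", playlist
):
    print(url)
```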

Does HLS Use TCP or UDP as its Transport Protocol?

Because HLS is delivered over HTTP, it typically uses Transmission Control Protocol (TCP) as its transport protocol. TCP is connection-oriented and guarantees that data arrives complete and in the correct order, which is crucial for maintaining the integrity of streamed media. User Datagram Protocol (UDP) can deliver data with less delay because it forgoes connection setup, retransmission, and ordering guarantees, but lost or out-of-order packets can lead to a subpar viewing experience unless the application handles them itself. HLS’s reliance on TCP therefore prioritizes reliability over speed, aligning with the need for consistent and high-quality media playback.

What Other Protocols Are Commonly Used for Streaming?

Several streaming protocols are widely used alongside HLS, each with unique features and applications:

- MPEG-DASH: An adaptive bitrate streaming protocol that, like HLS, segments content for efficient delivery, and natively supports timed metadata, captions and ad insertion. MPEG-DASH is codec-agnostic and supported by various devices and platforms, making it a versatile choice for streaming, often alongside HLS.

- WebRTC: Designed for real-time communication, WebRTC enables peer-to-peer audio, video, and data sharing with minimal latency. It’s commonly used in applications like video conferencing and live interactive broadcasting.

- Common Media Application Format (CMAF): Proposed by Apple and Microsoft and standardized through MPEG, CMAF aims to standardize the media format for HTTP-based streaming so that the same segments can be delivered using either HLS or DASH. By reducing the need to store multiple data formats, CMAF simplifies workflows and decreases storage costs.

Bonus!

Real-Time Messaging Protocol (RTMP): Initially developed by Macromedia (now Adobe), RTMP was a standard for live streaming and is known for low latency. However, its reliance on proprietary servers, the need for a browser plugin for playback, and limited support on mobile devices have led to a decline in its use as a playback protocol. It remains very popular as an ingest protocol.

Comparison of Common Streaming Protocols:

| Protocol | Latency | Compatibility | Use Cases |
| --- | --- | --- | --- |
| HLS | Moderate | Broad device support | Live and on-demand streaming |
| RTMP | Low | Limited mobile support | Live streaming (legacy), ingest |
| MPEG-DASH | Moderate | Wide device and platform compatibility | Live and on-demand streaming |
| WebRTC | Very Low | Browser-based applications | Real-time communication |
| CMAF | Moderate | Standardizes HLS and DASH workflows | Efficient multi-platform streaming |

Does Wowza Support HTTP Live Streaming?

Wowza offers robust support for HLS through its products:

- Wowza Streaming Engine: This customizable media server software enables streaming of live and on-demand content using HLS and Low-Latency HLS (LL-HLS). It supports advanced features such as adaptive bitrate streaming, DRM integration, ad insertion, and closed captioning, ensuring a comprehensive, low-latency streaming solution.

- Wowza Video: A cloud-based platform that simplifies live streaming workflows, providing scalable and reliable HLS delivery. It includes features such as real-time analytics, global content delivery, and low-latency streaming options.

Benefits of using Wowza for HLS streaming:

- Scalability: Efficiently handles large audiences by integrating with CDNs and leveraging adaptive bitrate streaming.

- Low Latency: Supports Low-Latency HLS (LL-HLS) to minimize delays between content capture and playback, enhancing viewer engagement.

- Reliability: Provides consistent and high-quality streaming experiences across various devices and network conditions.

For more information on Wowza’s HLS capabilities, visit our HLS Streaming Protocol page.

FAQs:

- What devices and browsers support HLS?

HLS is supported on a wide range of devices, including iOS and Android smartphones, tablets, smart TVs, and most modern web browsers like Edge, Chrome, and Firefox.

- What codecs does HLS support?

HLS supports H.264 (AVC), H.265 (HEVC), and AV1. The ability of HLS to support advanced codecs ensures the highest efficiency possible while still being able to target playback on any device.

- Can HLS support 4K streaming?

Yes, HLS can deliver 4K content by encoding video at higher resolutions and bitrates, provided the user’s device and network can handle the increased data load.

- Is HLS suitable for live events?

Absolutely. HLS is widely used for live event streaming due to its scalability and adaptability to varying network conditions.

- How to implement low-latency HLS streaming?

Implementing Low-Latency HLS (LL-HLS) involves configuring your streaming server and player to support shorter segment durations and partial segment delivery, reducing the delay between capture and playback.

- What are common issues with HLS streaming and how to troubleshoot them?

Common issues include buffering, latency, and playback errors. Troubleshooting steps involve optimizing segment sizes, ensuring proper encoding settings, and utilizing a robust CDN for content delivery.

Conclusion

HLS has become the gold standard for scalable, high-quality video streaming across the web. With support for adaptive bitrate streaming, compatibility with nearly every modern device, and growing capabilities like low-latency delivery, it’s no surprise that HLS is trusted by broadcasters, developers, and enterprises alike.

Whether you’re delivering on-demand content or broadcasting live events, Wowza’s end-to-end streaming solutions make it easy to leverage the full power of HLS. Get started today with a free trial or contact our team to discuss how we can help you build and scale your video streaming workflow.